Microsoft DP-700 Implementing Data Engineering Solutions Using Microsoft Fabric Exam Practice Test

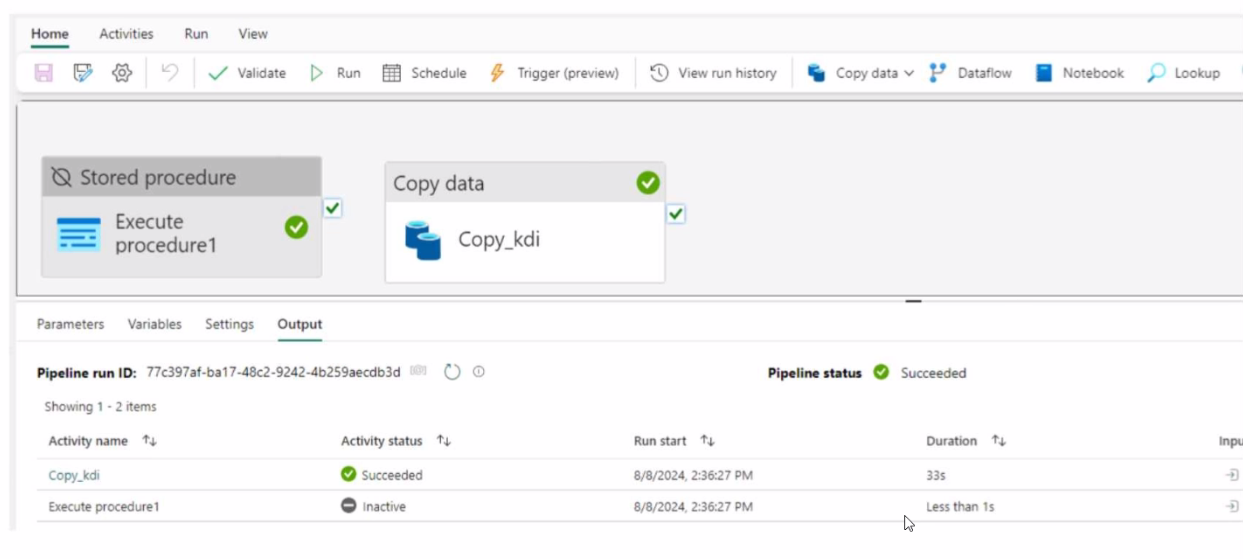

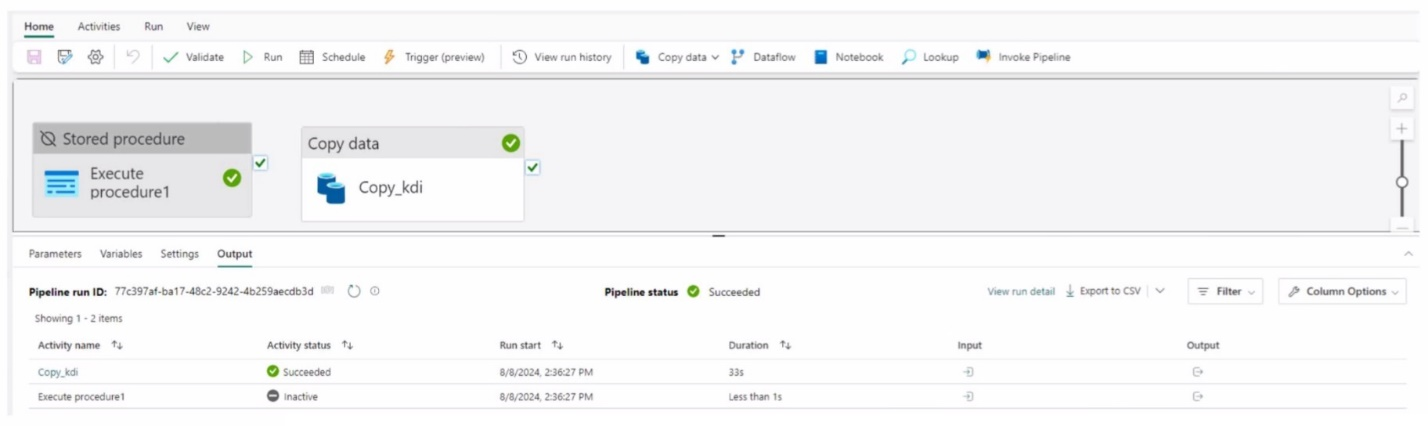

You have a Fabric workspace that contains a data pipeline named Pipeline1 as shown in the exhibit.

Answer : A

Exhibit.

You have a Fabric workspace that contains a write-intensive warehouse named DW1. DW1 stores staging tables that are used to load a dimensional model. The tables are often read once, dropped, and then recreated to process new data.

You need to minimize the load time of DW1.

What should you do?

Answer : C

You have a Fabric workspace that contains a lakehouse and a semantic model named Model1.

You use a notebook named Notebook1 to ingest and transform data from an external data source.

You need to execute Notebook1 as part of a data pipeline named Pipeline1. The process must meet the following requirements:

* Run daily at 07:00 AM UTC.

* Attempt to retry Notebook1 twice if the notebook fails.

* After Notebook1 executes successfully, refresh Model1.

Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

Answer : A, C, E

You have a Fabric workspace that contains a lakehouse named Lakehouse1.

You plan to create a data pipeline named Pipeline1 to ingest data into Lakehouse1. You will use a parameter named param1 to pass an external value into Pipeline1. The param1 parameter has a data type of int.

You need to ensure that the pipeline expression returns param1 as an int value.

How should you specify the parameter value?

Answer : B

You need to develop an orchestration solution in Fabric that will load each item one after the other. The solution must be scheduled to run every 15 minutes. Which type of item should you use?

Answer : B

You have an Azure key vault named KeyVault1 that contains secrets.

You have a Fabric workspace named Workspace1. Workspace1 contains a notebook named Notebook1 that performs the following tasks:

* Loads stage data to the target tables in a lakehouse

* Triggers the refresh of a semantic model

You plan to add functionality to Notebook1 that will use the Fabric API to monitor the semantic model refreshes. You need to retrieve the registered application ID and secret from KeyVault1 to generate the authentication token.

Solution: You use the following code segment:

Use notebookutils.credentials.getSecret and specify the key vault URL and the name of a linked service.

Does this meet the goal?

Answer : B

You have an Azure key vault named KeyVault1 that contains secrets.

You have a Fabric workspace named Workspace1. Workspace1 contains a notebook named Notebook1 that performs the following tasks:

* Loads stage data to the target tables in a lakehouse

* Triggers the refresh of a semantic model

You plan to add functionality to Notebook1 that will use the Fabric API to monitor the semantic model refreshes. You need to retrieve the registered application ID and secret from KeyVault1 to generate the authentication token.

Solution: You use the following code segment:

Use notebookutils.credentials.getSecret and specify the key vault URL and key vault secret.

Does this meet the goal?

Answer : A
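
For illustration only, the accepted solution above might look roughly like the following sketch. It assumes the standard public-cloud vault URL pattern (`https://<name>.vault.azure.net/`) and two hypothetical secret names, `app-id` and `app-secret`, neither of which appears in the question. The secret getter is passed in as an argument so the helper can be exercised outside Fabric; inside a Fabric notebook, `notebookutils.credentials.getSecret` would be supplied.

```python
from typing import Callable, Tuple


def load_app_credentials(
    get_secret: Callable[[str, str], str],
    vault_name: str = "KeyVault1",
    app_id_secret: str = "app-id",          # hypothetical secret name
    app_secret_secret: str = "app-secret",  # hypothetical secret name
) -> Tuple[str, str]:
    """Return (application ID, client secret) read from the key vault.

    get_secret is called as get_secret(vault_url, secret_name), which is
    the argument order the question describes: key vault URL, then secret.
    """
    # Assumed URL format for a vault in the public Azure cloud.
    vault_url = f"https://{vault_name}.vault.azure.net/"
    app_id = get_secret(vault_url, app_id_secret)
    app_secret = get_secret(vault_url, app_secret_secret)
    return app_id, app_secret


# In a Fabric notebook, where notebookutils is preloaded, the call would be:
#   app_id, app_secret = load_app_credentials(notebookutils.credentials.getSecret)
```

The two returned values would then feed whatever token request Notebook1 makes against the Fabric API; that step is not shown because the question does not specify it.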