Google Professional Cloud Network Engineer Exam Questions

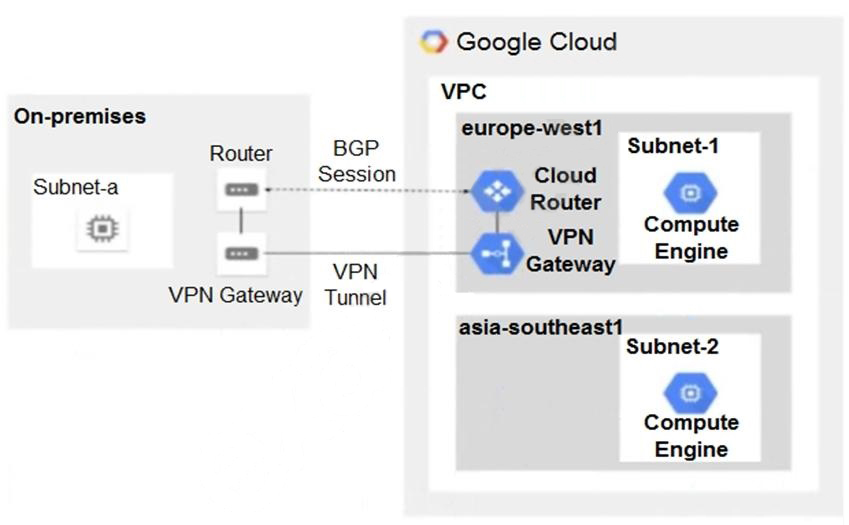

You have the following routing design. You discover that Compute Engine instances in Subnet-2 in the asia-southeast1 region cannot communicate with compute resources on-premises. What should you do?

Answer : C

Recently, your networking team enabled Cloud CDN for one of the external-facing services that is exposed through an external Application Load Balancer. The application team has already defined which content should be cached within the responses. Upon testing the load balancer, you did not observe any change in performance after the Cloud CDN enablement. You need to resolve the issue. What should you do?

Answer : B

When enabling Cloud CDN, for caching behavior to follow the application-defined caching headers, you need to configure the USE_ORIGIN_HEADERS caching mode. This setting ensures that the Cloud CDN respects the cache control headers specified by the backend, allowing the application-defined caching rules to dictate what content gets cached. This is often required when specific caching directives are already set by the application.
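As a rough illustration of what "respecting origin headers" means, the sketch below decides whether a response would be eligible for caching based only on its Cache-Control value. It is a simplified model written for this explanation, not Cloud CDN's actual logic: the function name is hypothetical, and the real service also considers other headers (for example Expires, Vary, and Set-Cookie).

```python
def is_cacheable_by_origin_headers(cache_control: str) -> bool:
    """Simplified check of a Cache-Control value (assumption: only this
    header is considered; real CDNs inspect more than this)."""
    directives = {}
    for part in cache_control.split(","):
        part = part.strip().lower()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip()] = value.strip()

    # Directives that forbid storing the response in a shared cache.
    if "no-store" in directives or "private" in directives:
        return False

    # Require a positive freshness lifetime; s-maxage wins over max-age.
    lifetime = directives.get("s-maxage") or directives.get("max-age") or "0"
    return lifetime.isdigit() and int(lifetime) > 0


print(is_cacheable_by_origin_headers("public, max-age=3600"))
print(is_cacheable_by_origin_headers("private, max-age=3600"))
print(is_cacheable_by_origin_headers("no-store"))
```

If the backend sends no caching directives at all, a mode that relies on origin headers has nothing to act on, which is consistent with seeing no performance change after enabling the CDN.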

You are deploying GKE clusters in your organization's Google Cloud environment. The pods in these clusters need to egress directly to the internet for a majority of their communications. You need to deploy the clusters and associated networking features using the most cost-efficient approach, and following Google-recommended practices. What should you do?

Answer : A

For GKE pods that need to egress directly to the internet for most of their communications, the most cost-efficient and straightforward approach is to deploy a GKE cluster with public cluster nodes. Public nodes have external IP addresses, allowing pods to directly reach the internet. This eliminates the need for additional services like Cloud NAT or Secure Web Proxy for outbound internet access, which would incur extra costs and management overhead.

Exact Extract:

'Public clusters have nodes with external IP addresses, allowing them to directly initiate connections to the internet. This is the simplest configuration for clusters that require direct internet egress for their workloads.'

'When using public clusters, Cloud NAT is not required for outbound internet connectivity from the nodes or pods, as they can use their external IP addresses. This can reduce operational overhead and cost compared to private clusters that need NAT.'

Reference: Google Kubernetes Engine Documentation - Cluster network configuration, Public clusters vs Private clusters

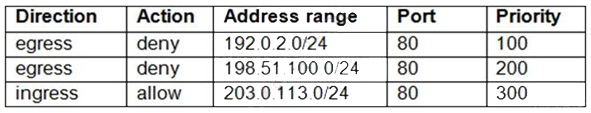

You have the following firewall ruleset applied to all instances in your Virtual Private Cloud (VPC):

You need to update the firewall rule to add the following rule to the ruleset:

You are using a new user account. You must assign the appropriate Identity and Access Management (IAM) user roles to this new user account before updating the firewall rule. The new user account must be able to apply the update and view firewall logs. What should you do?

Answer : A

After a network change window, one of your company's applications stops working. The application uses an on-premises database server that no longer receives any traffic from the application. The database server IP address is 10.2.1.25. You examine the change request, and the only change is that 3 additional VPC subnets were created. The new VPC subnets created are 10.1.0.0/16, 10.2.0.0/16, and 10.3.1.0/24. The on-premises router is advertising 10.0.0.0/8.

What is the most likely cause of this problem?

Answer : B
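The answer options are not reproduced here, but the scenario can be checked with a small longest-prefix-match sketch. It assumes that the most specific matching route wins, which in a VPC coincides with subnet routes taking precedence over a broader learned route. The route labels and the helper name are hypothetical.

```python
import ipaddress


def most_specific_route(destination, routes):
    """Return the (network, label) pair with the longest matching prefix."""
    addr = ipaddress.ip_address(destination)
    matches = []
    for cidr, label in routes:
        network = ipaddress.ip_network(cidr)
        if addr in network:
            matches.append((network, label))
    if not matches:
        return None
    return max(matches, key=lambda match: match[0].prefixlen)


routes = [
    ("10.0.0.0/8", "on-premises (advertised by the on-premises router)"),
    ("10.1.0.0/16", "new VPC subnet"),
    ("10.2.0.0/16", "new VPC subnet"),
    ("10.3.1.0/24", "new VPC subnet"),
]

network, label = most_specific_route("10.2.1.25", routes)
print(network, "->", label)
```

Under this model, traffic for 10.2.1.25 matches the new 10.2.0.0/16 subnet rather than the 10.0.0.0/8 route toward on-premises, so it never leaves the VPC.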

You have a web application that is currently hosted in the us-central1 region. Users experience high latency when traveling in Asia. You've configured a network load balancer, but users have not experienced a performance improvement. You want to decrease the latency.

What should you do?

Answer : B

You want to use Cloud Interconnect to connect your on-premises network to a GCP VPC. You cannot meet Google at one of its point-of-presence (POP) locations, and your on-premises router cannot run a Border Gateway Protocol (BGP) configuration.

Which connectivity model should you use?

Answer : D

https://cloud.google.com/network-connectivity/docs/interconnect/concepts/partner-overview

For Layer 3 connections, your service provider establishes a BGP session between your Cloud Routers and their edge routers for each VLAN attachment. You don't need to configure BGP on your on-premises router. Google and your service provider automatically set the correct configurations.

You are configuring the final elements of a migration effort where resources have been moved from on-premises to Google Cloud. While reviewing the deployed architecture, you noticed that DNS resolution is failing when queries are being sent to the on-premises environment. You log in to a Compute Engine instance, try to resolve an on-premises hostname, and the query fails. DNS queries are not arriving at the on-premises DNS server. You need to use managed services to reconfigure Cloud DNS to resolve the DNS error. What should you do?

Answer : A

To resolve DNS resolution issues for on-premises domains from Google Cloud, you should use Cloud DNS outbound forwarding zones. This setup forwards DNS requests for specific domains to on-premises DNS servers. Cloud Router is needed to advertise the range for the DNS proxy service back to the on-premises environment, ensuring that DNS queries from Compute Engine instances reach the on-premises DNS servers.
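The return-path requirement can be sketched as a simple containment check. The sketch assumes that Cloud DNS sends outbound forwarding queries from 35.199.192.0/19, the range described in Google's documentation; the function name and the sample advertised ranges are hypothetical.

```python
import ipaddress

# Assumed source range for Cloud DNS outbound forwarding queries.
CLOUD_DNS_FORWARDING_RANGE = ipaddress.ip_network("35.199.192.0/19")


def return_path_exists(advertised_ranges):
    """True if any range advertised to on-premises covers the DNS proxy range."""
    for cidr in advertised_ranges:
        if CLOUD_DNS_FORWARDING_RANGE.subnet_of(ipaddress.ip_network(cidr)):
            return True
    return False


# Only a VPC subnet is advertised: on-premises cannot reply to the DNS proxy.
print(return_path_exists(["10.128.0.0/20"]))

# The proxy range is added as a custom advertisement on Cloud Router.
print(return_path_exists(["10.128.0.0/20", "35.199.192.0/19"]))
```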

You have configured a Compute Engine virtual machine instance as a NAT gateway. You execute the following command:

gcloud compute routes create no-ip-internet-route \
--network custom-network1 \
--destination-range 0.0.0.0/0 \
--next-hop-instance nat-gateway \
--next-hop-instance-zone us-central1-a \
--tags no-ip --priority 800

You want existing instances to use the new NAT gateway. Which command should you execute?

Answer : B

https://cloud.google.com/sdk/gcloud/reference/compute/routes/create

The route above is scoped to the network tag no-ip, so it only applies to instances that carry that tag. To make an existing instance use the route, add the tag to that instance.
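The tag binding can be modeled in a few lines. This is an illustrative sketch of the matching rule only (a tagged route applies to instances that share at least one of its tags); the function name and the instance tags other than no-ip are hypothetical.

```python
def route_applies(route_tags, instance_tags):
    """Model of tag-scoped routes: an untagged route applies to every
    instance; a tagged route needs at least one tag in common."""
    if not route_tags:
        return True
    return bool(set(route_tags) & set(instance_tags))


nat_route_tags = ["no-ip"]

print(route_applies(nat_route_tags, ["web"]))           # existing instance, not yet tagged
print(route_applies(nat_route_tags, ["web", "no-ip"]))  # same instance after adding the tag
```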

Your company's current network architecture has two VPCs that are connected by a dual-NIC instance that acts as a bump-in-the-wire firewall between the two VPCs. Flows between pairs of subnets across the two VPCs are working correctly. Suddenly, you receive an alert that none of the flows between the two VPCs are working anymore. You need to troubleshoot the problem. What should you do? (Choose 2 answers)

Answer : C, E

You should check Cloud Logging to see if any firewall rules or policies were modified, as these could block traffic between the VPCs. Additionally, the --can-ip-forward attribute must be enabled for the dual-NIC instance to allow forwarding traffic between the interfaces.

You have the networking configuration shown in the diagram. Two VLAN attachments associated with two Dedicated Interconnect connections terminate on the same Cloud Router (mycloudrouter). The Interconnect connections terminate on two separate on-premises routers. You advertise the same prefixes from the Border Gateway Protocol (BGP) sessions associated with each of the VLAN attachments.

You notice an asymmetric traffic flow between the two Interconnect connections. Which of the following actions should you take to troubleshoot the asymmetric traffic flow?

Answer : A

The correct answer is B. From the Cloud CLI, run gcloud compute routers get-status mycloudrouter --project PROJECT_ID --region REGION and review the results.

This command will show you the BGP session status, the advertised and learned routes, and the last error for each VLAN attachment. You can use this information to troubleshoot the asymmetric traffic flow and identify any issues with the BGP configuration or the Interconnect connections.

The other options are not correct because:

Option A will only show you the BGP session status, but not the advertised and learned routes or the last error for each VLAN attachment.

Option C will only show you the VPC Flow Logs, which are useful for monitoring and troubleshooting network performance and security issues within your VPC network, but not for your Interconnect connections.

Option D will only show you the basic information about the Cloud Router, such as its name, region, network, and BGP settings, but not the detailed status of each VLAN attachment.

One instance in your VPC is configured to run with a private IP address only. You want to ensure that even if this instance is deleted, its current private IP address will not be automatically assigned to a different instance.

In the GCP Console, what should you do?

Answer : C

https://cloud.google.com/compute/docs/ip-addresses/reserve-static-internal-ip-address#reservenewip

The documentation states that a reserved static internal IP address can be 'automatically allocated or an unused address from an existing subnet'.

Your company is working with a partner to provide a solution for a customer. Both your company and the partner organization are using GCP. There are applications in the partner's network that need access to some resources in your company's VPC. There is no CIDR overlap between the VPCs.

Which two solutions can you implement to achieve the desired results without compromising the security? (Choose two.)

Answer : A, C

Google Cloud VPC Network Peering allows internal IP address connectivity across two Virtual Private Cloud (VPC) networks regardless of whether they belong to the same project or the same organization.
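The question notes that the two VPCs have no CIDR overlap. A check of that kind can be sketched with the standard library; the subnet ranges below are made-up examples, and the helper name is hypothetical.

```python
import ipaddress
from itertools import product


def overlapping_ranges(vpc_a_ranges, vpc_b_ranges):
    """Return every pair of subnet ranges that overlap across two VPCs."""
    pairs = []
    for a, b in product(vpc_a_ranges, vpc_b_ranges):
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b)):
            pairs.append((a, b))
    return pairs


company_vpc = ["10.10.0.0/20", "10.10.16.0/20"]   # hypothetical ranges
partner_vpc = ["10.20.0.0/20", "172.16.0.0/24"]   # hypothetical ranges

print(overlapping_ranges(company_vpc, partner_vpc))
```

An empty result means no range in one VPC overlaps a range in the other.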

You have deployed an HTTP(S) load balancer, but health checks to port 80 on the Compute Engine virtual machine instance are failing, and no traffic is sent to your instances. You want to resolve the problem. Which commands should you run?

Answer : A

Your company has a single Virtual Private Cloud (VPC) network deployed in Google Cloud with access from on-premises locations using Cloud Interconnect connections. Your company must be able to send traffic to Cloud Storage only through the Interconnect links while accessing other Google APIs and services over the public internet. What should you do?

Answer : B

Your company's web server administrator is migrating on-premises backend servers for an application to GCP. Libraries and configurations differ significantly across these backend servers. The migration to GCP will be lift-and-shift, and all requests to the servers will be served by a single network load balancer frontend. You want to use a GCP-native solution when possible.

How should you deploy this service in GCP?

Answer : B

You suspect that one of the virtual machines (VMs) in your default Virtual Private Cloud (VPC) is under a denial-of-service attack. You need to analyze the incoming traffic for the VM to understand where the traffic is coming from. What should you do?

Answer : B

Your company has recently expanded their EMEA-based operations into APAC. Globally distributed users report that their SMTP and IMAP services are slow. Your company requires end-to-end encryption, but you do not have access to the SSL certificates.

Which Google Cloud load balancer should you use?

Answer : D

https://cloud.google.com/security/encryption-in-transit/

Automatic encryption between GFEs and backends: For the following load balancer types, Google automatically encrypts traffic between Google Front Ends (GFEs) and your backends that reside within Google Cloud VPC networks: HTTP(S) Load Balancing, TCP Proxy Load Balancing, and SSL Proxy Load Balancing.

You are creating an instance group and need to create a new health check for HTTP(S) load balancing.

Which two methods can you use to accomplish this? (Choose two.)

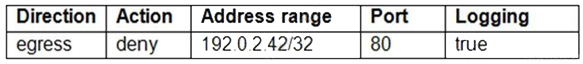

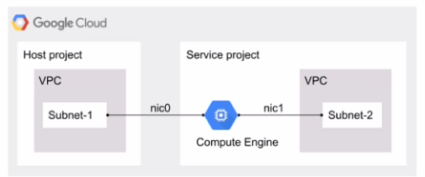

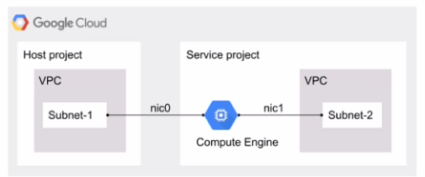

You have the following Shared VPC design. VPC Flow Logs is configured for Subnet-1 in the host VPC. You also want to monitor flow logs for Subnet-2. What should you do?

Answer : D

Understanding VPC Flow Logs:

VPC Flow Logs is a feature that captures information about the IP traffic going to and from network interfaces in a VPC. It helps in monitoring and analyzing network traffic, ensuring security, and optimizing network performance.

Current Configuration:

According to the diagram, VPC Flow Logs is already configured for Subnet-1 in the host VPC. This means that traffic information for Subnet-1 is being captured and logged.

Requirement for Subnet-2:

The goal is to monitor flow logs for Subnet-2, which is in the service project VPC.

Correct Configuration for Subnet-2:

To monitor the flow logs for Subnet-2, you need to configure VPC Flow Logs within the service project VPC where Subnet-2 resides. This is because VPC Flow Logs must be configured in the same project and VPC where the subnet is located.

Implementation Steps:

Go to the Google Cloud Console.

Navigate to the service project where Subnet-2 is located.

Select the VPC network containing Subnet-2.

Enable VPC Flow Logs for Subnet-2 by editing the subnet settings and enabling the flow logs option.

Cost and Performance Considerations:

Enabling VPC Flow Logs may incur additional costs based on the volume of data logged. Review the pricing implications before enabling it.

Analyze and manage the data collected to avoid unnecessary logging and costs.

References:

Google Cloud VPC Flow Logs Documentation

Configuring VPC Flow Logs

Shared VPC Overview

By configuring VPC Flow Logs in the service project VPC for Subnet-2, you ensure that traffic data is correctly captured and monitored, adhering to Google Cloud's best practices.

You configured Cloud VPN with dynamic routing via Border Gateway Protocol (BGP). You added a custom route to advertise a network that is reachable over the VPN tunnel. However, the on-premises clients still cannot reach the network over the VPN tunnel. You need to examine the logs in Cloud Logging to confirm that the appropriate routes are being advertised over the VPN tunnel. Which filter should you use in Cloud Logging to examine the logs?

Answer : C

Your company is running out of network capacity to run a critical application in the on-premises data center. You want to migrate the application to GCP. You also want to ensure that the Security team does not lose their ability to monitor traffic to and from Compute Engine instances.

Which two products should you incorporate into the solution? (Choose two.)

All the instances in your project are configured with the custom metadata enable-oslogin value set to FALSE and to block project-wide SSH keys. None of the instances are set with any SSH key, and no project-wide SSH keys have been configured. Firewall rules are set up to allow SSH sessions from any IP address range. You want to SSH into one instance.

What should you do?

Answer : A

Your organization is running out of private IPv4 IP addresses. You need to create a new design pattern to reduce IP usage in your Google Kubernetes Engine clusters. Each new GKE cluster should have a unique /24 range of routable RFC1918 IP addresses. What should you do?

Answer : C

The most effective long-term solution to address IPv4 address exhaustion in GKE clusters, while still ensuring routability and unique ranges per cluster, is to transition to dual-stack IPv4/IPv6 clusters and leverage IPv6 for Pods and Services. This allows you to conserve IPv4 addresses for critical use cases while providing a vast address space with IPv6 for pods and services, significantly reducing the pressure on your private IPv4 ranges. Google Cloud GKE fully supports dual-stack networking.

Exact Extract:

'Dual-stack clusters enable you to assign both IPv4 and IPv6 addresses to Pods and Services. This approach helps conserve IPv4 address space by shifting a significant portion of the network communication to IPv6, particularly for internal cluster communication or communication with other IPv6-enabled services.'

You are designing an IP address scheme for new private Google Kubernetes Engine (GKE) clusters. Due to IP address exhaustion of the RFC 1918 address space in your enterprise, you plan to use privately used public IP space for the new clusters. You want to follow Google-recommended practices. What should you do after designing your IP scheme?

Answer : D

The correct answer is D. Create privately used public IP primary and secondary subnet ranges for the clusters. Create a private GKE cluster with the following options selected: --disable-default-snat, --enable-ip-alias, and --enable-private-nodes.

This answer is based on the following facts:

The other options are not correct because:

Option A is not suitable. Creating RFC 1918 primary and secondary subnet IP ranges for the clusters does not solve the problem of address exhaustion. Re-using the secondary address range for pods across multiple private GKE clusters can cause IP conflicts and routing issues.

Option B is also not suitable. Creating RFC 1918 primary and secondary subnet IP ranges for the clusters does not solve the problem of address exhaustion. Re-using the secondary address range for services across multiple private GKE clusters can cause IP conflicts and routing issues.

Option C is not feasible. Creating privately used public IP primary and secondary subnet ranges for the clusters is a valid step, but creating a private GKE cluster with only --enable-ip-alias and --enable-private-nodes options is not enough. You also need to disable default SNAT to avoid IP conflicts with other public IP addresses on the internet.

Your organization has a single project that contains multiple Virtual Private Clouds (VPCs). You need to secure API access to your Cloud Storage buckets and BigQuery datasets by allowing API access only from resources in your corporate public networks. What should you do?

Answer : B

Your company runs an enterprise platform on-premises using virtual machines (VMs). Your internet customers have created tens of thousands of DNS domains pointing to your public IP addresses allocated to the VMs. Typically, your customers hard-code your IP addresses in their DNS records. You are now planning to migrate the platform to Compute Engine, and you want to use Bring Your Own IP (BYOIP). You want to minimize disruption to the platform. What should you do?

Answer : D

The correct answer is D because it allows you to use your own public IP addresses in Google Cloud without disrupting the platform or requiring your customers to update their DNS records. Option A is incorrect because it involves changing the IP addresses and notifying the customers, which can cause disruption and errors. Option B is incorrect because it does not use live migration, which is a feature that lets you control when Google starts advertising routes for your prefix. Option C is incorrect because it does not involve bringing your own IP addresses, but rather using Google-provided IP addresses.

References:

Professional Cloud Network Engineer Exam Guide

Bring your own IP addresses (BYOIP) to Azure with Custom IP Prefix

You need to enable Cloud CDN for all the objects inside a storage bucket. You want to ensure that all the objects in the storage bucket can be served by the CDN.

What should you do in the GCP Console?

Your multi-region VPC has had a long-standing HA VPN configured in "region 1" connected to your corporate network. You are planning to add two 10 Gbps Dedicated Interconnect connections and VLAN attachments in "region 2" to connect to the same corporate network. You need to plan for connectivity between your VPC and corporate network to ensure that traffic uses the Dedicated Interconnect connections as the primary path and the HA VPN as the secondary path. What should you do?

Answer : B

For the Dedicated Interconnect to be the primary connection over the HA VPN, you should:

Enable global dynamic routing mode to allow the VPC to distribute routes dynamically across regions.

Set the BGP priority for the VLAN attachments associated with the Dedicated Interconnect to a lower base priority (e.g., 100) than the HA VPN's priority (e.g., 20000) to ensure it is preferred.

Setting up global dynamic routing with adjusted BGP priorities on both Interconnect and VPN will allow dynamic routing of traffic based on set preferences and path attributes, such as MED and priority levels. This setup ensures the Dedicated Interconnect, with a lower priority value, becomes the primary path for traffic, while the HA VPN, with a higher priority, serves as a backup.
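The example priorities can be sanity-checked with a short sketch. It assumes that, under global dynamic routing, a route used from a different region than the one where it was learned has an inter-region cost added to its base priority, and that this cost is at most 9999. Both the cost ceiling and the function names are assumptions made for illustration; the real path choice also depends on the MED values the on-premises routers advertise.

```python
MAX_INTER_REGION_COST = 9999  # assumed upper bound on the added cost


def effective_priority(base_priority, route_region, vm_region,
                       inter_region_cost=MAX_INTER_REGION_COST):
    """Lower values are preferred; crossing regions adds a cost."""
    if route_region == vm_region:
        return base_priority
    return base_priority + inter_region_cost


def preferred_path(paths, vm_region):
    best = min(
        paths,
        key=lambda p: effective_priority(p["base_priority"], p["region"], vm_region),
    )
    return best["name"]


paths = [
    {"name": "dedicated-interconnect", "region": "region-2", "base_priority": 100},
    {"name": "ha-vpn", "region": "region-1", "base_priority": 20000},
]

# Even for VMs in region 1, next to the VPN, the Interconnect stays preferred.
print(preferred_path(paths, "region-1"))
print(preferred_path(paths, "region-2"))
```

The gap between 100 and 20000 is what matters here: it is wider than the largest assumed inter-region cost, so the Interconnect path wins from either region.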

Your company recently migrated to Google Cloud in a single region. You configured separate Virtual Private Cloud (VPC) networks for two departments: Department A and Department B. Department A has requested access to resources that are part of Department B's VPC. You need to configure the traffic from private IP addresses to flow between the VPCs using multi-NIC virtual machines (VMs) to meet security requirements. Your configuration must also:

* Support both TCP and UDP protocols

* Provide fully automated failover

* Include health checks

* Require minimal manual intervention in the client VMs

Which approach should you take?

Answer : D

The correct answer is D. Create an instance template and a managed instance group. Configure two separate internal TCP/UDP load balancers for each protocol (TCP/UDP), and configure the client VMs to use the internal load balancers' virtual IP addresses.

This answer is based on the following facts:

The other options are not correct because:

Option A is not suitable. Creating the VMs in the same zone does not provide high availability or failover. Using static routes with IP addresses as next hops requires manual intervention when NVAs are added or removed.

Option B is not optimal. Creating the VMs in different zones provides high availability, but not failover. Using static routes with instance names as next hops requires manual intervention when NVAs are added or removed.

Option C is not feasible. Creating an instance template and a managed instance group provides high availability and reliability, but using a single internal load balancer does not support both TCP and UDP protocols. You cannot define a custom static route with an internal load balancer as the next hop.

Your team deployed two applications in GKE that are exposed through an external Application Load Balancer. When queries are sent to www.mountkirkgames.com/sales and www.mountkirkgames.com/get-an-analysis, the correct pages are displayed. However, you have received complaints that www.mountkirkgames.com yields a 404 error. You need to resolve this error. What should you do?

Answer : A

The 404 error is occurring because there is no default backend defined for requests to the root URL. Defining the default backend in the Ingress YAML file ensures that requests to www.mountkirkgames.com are routed to the correct service.
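The effect of a missing default backend can be illustrated with a toy path matcher. This is a simplified model, not the load balancer's actual URL map logic; the service names and the function name are hypothetical.

```python
def pick_backend(path, path_rules, default_backend=None):
    """Return the backend for a request path, or None (served as a 404)
    when no rule matches and no default backend is defined."""
    for prefix, backend in path_rules.items():
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    return default_backend


rules = {
    "/sales": "sales-service",
    "/get-an-analysis": "analysis-service",
}

print(pick_backend("/sales", rules))                        # matched by a path rule
print(pick_backend("/", rules))                             # no rule, no default
print(pick_backend("/", rules, default_backend="home-service"))
```

With only the two path rules, a request for the root path matches nothing; once a default backend is supplied, the same request has somewhere to go.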

You have an application that is running in a managed instance group. Your development team has released an updated instance template which contains a new feature which was not heavily tested. You want to minimize impact to users if there is a bug in the new template.

How should you update your instances?

Your company has defined a resource hierarchy that includes a parent folder with subfolders for each department. Each department defines their respective project and VPC in the assigned folder and has the appropriate permissions to create Google Cloud firewall rules. The VPCs should not allow traffic to flow between them. You need to block all traffic from any source, including other VPCs, and delegate only the intra-VPC firewall rules to the respective departments. What should you do?

Answer : B
