Databricks-Machine-Learning-Associate Databricks Certified Machine Learning Associate Exam Practice Test

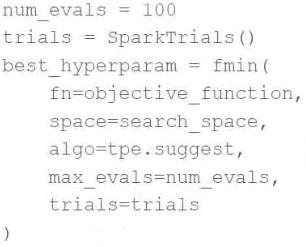

A data scientist wants to tune a set of hyperparameters for a machine learning model. They have wrapped a Spark ML model in the objective function objective_function and they have defined the search space search_space.

As a result, they have the following code block:

Which of the following changes do they need to make to the above code block in order to accomplish the task?

Answer : A

SparkTrials() distributes Hyperopt trials across a Spark cluster by running each trial on a worker, which suits single-node models such as scikit-learn estimators. A Spark ML model is already distributed: fitting it launches Spark jobs of its own, and that cannot be done from inside a trial running on a worker. The objective function here wraps a Spark ML model, so the code block should use Trials() instead. Trials() is Hyperopt's default class for recording search trials. It runs the trials sequentially on the driver, while the training inside each trial is still distributed by Spark ML.

Reference

Hyperopt documentation: http://hyperopt.github.io/hyperopt/

Which of the following is a benefit of using vectorized pandas UDFs instead of standard PySpark UDFs?

Answer : B

Vectorized pandas UDFs (also called Pandas UDFs) are a PySpark feature that is usually faster than standard Python UDFs. Spark passes data to them in batches using Apache Arrow, and the function receives each batch as a pandas Series or DataFrame, so it can apply vectorized pandas operations to the whole batch at once. A standard PySpark UDF is instead called once per row, which adds serialization and Python function-call overhead for every record.
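The difference can be seen without a Spark cluster. The following is a minimal sketch in plain pandas, assuming a hypothetical Celsius-to-Fahrenheit conversion: the row-wise function mirrors what a standard UDF receives (one value per call), and the batch function mirrors the body of a Pandas UDF (one pandas Series per call). In PySpark, the batch function would be registered with the pandas_udf decorator from pyspark.sql.functions; that step is omitted here so the example runs locally.

```python
import pandas as pd


def to_fahrenheit_row(celsius: float) -> float:
    """Row-at-a-time logic: called once per value, like a standard UDF."""
    return celsius * 9.0 / 5.0 + 32.0


def to_fahrenheit_batch(celsius: pd.Series) -> pd.Series:
    """Batch logic: one vectorized operation over a whole pandas Series,
    like the body of a Pandas UDF."""
    return celsius * 9.0 / 5.0 + 32.0


# Hypothetical batch of values, standing in for one batch handed over by Spark.
batch = pd.Series([0.0, 37.0, 100.0])

row_wise = pd.Series([to_fahrenheit_row(c) for c in batch])  # one call per row
vectorized = to_fahrenheit_batch(batch)                      # one call per batch
```

Both paths produce the same values; the batch version simply does the work in a single call instead of one call per record.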

A machine learning engineer is trying to scale a machine learning pipeline by distributing its feature engineering process.

Which of the following feature engineering tasks will be the least efficient to distribute?

Answer : D

Among the options listed, imputing missing feature values with the true median is the least efficient to distribute. An exact median depends on the global ordering of the values, so it cannot be assembled from small per-partition summaries: Spark has to sort the data or otherwise shuffle it across partitions. The mean, by contrast, needs only a sum and a count from each partition, and the mode can be found by combining per-partition value counts.
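A small worked example makes the contrast concrete. This is a sketch in plain Python with made-up values, where a list of lists stands in for the partitions of a distributed dataset; it is not Spark code.

```python
import statistics

# Hypothetical feature values spread over three partitions.
partitions = [[1, 2, 3], [4, 5, 6, 7], [100, 200]]
all_values = [v for part in partitions for v in part]

# Mean: each partition reports only (sum, count); the driver combines them.
partials = [(sum(part), len(part)) for part in partitions]
total_sum = sum(s for s, _ in partials)
total_count = sum(n for _, n in partials)
distributed_mean = total_sum / total_count

# Median: per-partition medians cannot be combined into the true median.
median_of_medians = statistics.median(statistics.median(p) for p in partitions)  # 5.5
true_median = statistics.median(all_values)  # 5, needs the globally sorted data
```

The combined mean matches the mean of the full dataset exactly, while the median of the partition medians (5.5) differs from the true median (5).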

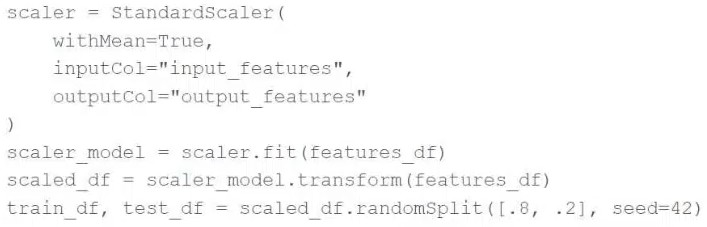

A data scientist is using Spark ML to engineer features for an exploratory machine learning project.

They decide they want to standardize their features using the following code block:

Upon code review, a colleague expressed concern with the features being standardized prior to splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

Answer : E

To address the concern, split the data first and then use the Pipeline API so that only the training data's summary statistics are used to standardize the test data. The StandardScaler (or any other scaler) is fit on the training set, and the fitted scaler then transforms both the training and test sets. This prevents information from the test data leaking into model training and keeps the evaluation fair.

Reference

Best Practices in Preprocessing in Spark ML (Handling Data Splits and Feature Standardization).
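The leakage-free order of operations can be sketched as follows. This is an illustration in NumPy rather than Spark ML, with made-up data and a hypothetical 80/20 split; in Spark ML the same pattern corresponds to calling fit on the training DataFrame and transform on both DataFrames.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
features = rng.normal(loc=50.0, scale=10.0, size=(100, 3))  # made-up data

# 1. Split first (hypothetical 80/20 split).
train, test = features[:80], features[80:]

# 2. Fit: compute summary statistics from the training rows only.
train_mean = train.mean(axis=0)
train_std = train.std(axis=0, ddof=1)  # sample standard deviation

# 3. Transform: apply the training statistics to both sets.
train_scaled = (train - train_mean) / train_std
test_scaled = (test - train_mean) / train_std
```

The scaled training set has zero mean and unit standard deviation by construction. The scaled test set generally does not, which is expected: its own statistics were never used.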

Which of the Spark operations can be used to randomly split a Spark DataFrame into a training DataFrame and a test DataFrame for downstream use?

Answer : E

The randomSplit method randomly splits a Spark DataFrame into training and test sets. It takes a list of weights that set the proportion of each split, plus an optional seed for reproducibility, and returns a list of DataFrames, one per weight. It is the method intended for this task and the usual choice when preparing data for training and testing in machine learning workflows.

Reference

Apache Spark DataFrame API documentation (DataFrame Operations: randomSplit).

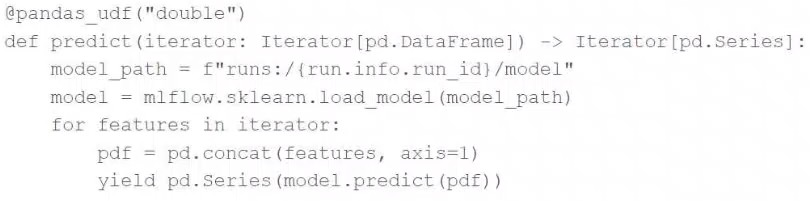

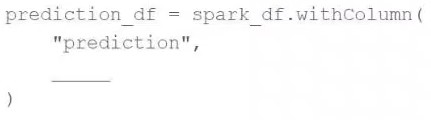

A data scientist has defined a Pandas UDF function predict to parallelize the inference process for a single-node model:

They have written the following incomplete code block to use predict to score each record of Spark DataFrame spark_df:

Which of the following lines of code can be used to complete the code block to successfully complete the task?

Answer : B

To score each record of a Spark DataFrame with predict, use the mapInPandas method. Spark passes each partition to the function as an iterator of pandas DataFrames, and the function yields pandas DataFrames of results. The completion passes predict itself, as in mapInPandas(predict), rather than the result of calling it. Note that the full PySpark signature is mapInPandas(func, schema), so a complete call also supplies the schema of the returned rows.

Reference

PySpark DataFrame documentation (Using mapInPandas with UDFs).
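The function contract that mapInPandas relies on can be exercised locally. The sketch below assumes a hypothetical stand-in model (a fixed linear formula) and hypothetical column names x1 and x2; the real predict would load and call the trained single-node model instead. The list of pandas DataFrames stands in for the batches Spark would supply from one partition.

```python
from typing import Iterator

import pandas as pd


def stand_in_model(pdf: pd.DataFrame) -> pd.Series:
    """Hypothetical single-node model: a fixed linear formula."""
    return 2.0 * pdf["x1"] + pdf["x2"]


def predict(batches: Iterator[pd.DataFrame]) -> Iterator[pd.DataFrame]:
    """Consume pandas DataFrame batches and yield one result DataFrame each."""
    for pdf in batches:
        yield pd.DataFrame({"prediction": stand_in_model(pdf)})


# Two made-up batches standing in for the contents of one Spark partition.
partition_batches = [
    pd.DataFrame({"x1": [1.0, 2.0], "x2": [0.5, 0.5]}),
    pd.DataFrame({"x1": [3.0], "x2": [1.0]}),
]

# Drive the generator by hand, as Spark would for each partition.
scored = pd.concat(list(predict(iter(partition_batches))), ignore_index=True)
```

With a Spark session available, a function of this shape would be applied as spark_df.mapInPandas(predict, schema="prediction double"), where the schema string describes the columns the function yields.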

A data scientist has written a data cleaning notebook that utilizes the pandas library, but their colleague has suggested that they refactor their notebook to scale with big data.

Which of the following approaches can the data scientist take to spend the least amount of time refactoring their notebook to scale with big data?

Answer : E

The data scientist can refactor the notebook to use the pandas API on Spark (formerly the Koalas project). This requires the fewest changes to existing pandas-based code while scaling to big data through Spark's distributed computing. Because the pandas API on Spark closely mirrors the pandas API, the transition is faster than rewriting the code with the PySpark DataFrame API, the Scala Dataset API, or Spark SQL.

Reference

Databricks documentation on pandas API on Spark (formerly Koalas).