Databricks Certified Data Engineer Associate Exam Practice Test

A new data engineering team, identified by the group name team, has been assigned to an ELT project. The new data engineering team will need full privileges on the table sales to fully manage the project.

Which command can be used to grant full permissions on the table to the new data engineering team?

Answer: A

To grant full privileges on a table such as 'sales' to a group like 'team', the correct SQL command in Databricks is:

GRANT ALL PRIVILEGES ON TABLE sales TO team;

This command grants every privilege that applies to the table to the group team. In Databricks these include SELECT for reading and MODIFY, which covers inserting, updating, and deleting data. Granting all privileges is typically necessary when a team needs full control over a table to manage it as part of a project or ongoing maintenance.

Reference: Databricks documentation on SQL permissions
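As a minimal sketch, the statement above could be assembled from Python before being submitted to Spark. The helper name build_grant_all and its identifier check are hypothetical and are not part of any Databricks API; only the GRANT statement itself comes from the answer above.

```python
import re

# Hypothetical helper (not a Databricks API): accepts plain or dot-qualified names only.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*$")


def build_grant_all(table: str, principal: str) -> str:
    """Return a GRANT statement giving `principal` all privileges on `table`."""
    for name in (table, principal):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    return f"GRANT ALL PRIVILEGES ON TABLE {table} TO {principal}"


statement = build_grant_all("sales", "team")
print(statement)
# In a Databricks notebook, where a SparkSession named `spark` is predefined,
# the statement could then be run with: spark.sql(statement)
```

The check rejects anything that is not a simple identifier, so arbitrary text cannot be spliced into the statement. Principals such as email addresses would need backtick quoting, which this sketch does not handle.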

A data engineer has a Python variable table_name that they would like to use in a SQL query. They want to construct a Python code block that will run the query using table_name.

They have the following incomplete code block:

____(f"SELECT customer_id, spend FROM {table_name}")

Which of the following can be used to fill in the blank to successfully complete the task?

A data engineer has joined an existing project and they see the following query in the project repository:

CREATE STREAMING LIVE TABLE loyal_customers AS
SELECT customer_id
FROM STREAM(LIVE.customers)
WHERE loyalty_level = 'high';

Which of the following describes why the STREAM function is included in the query?

A data engineer is attempting to drop a Spark SQL table my_table and runs the following command:

DROP TABLE IF EXISTS my_table;

After running this command, the engineer notices that the data files and metadata files have been deleted from the file system.

Which of the following describes why all of these files were deleted?

Answer: A

All of the data files and metadata files were deleted from the file system because the table was managed. A managed table is a table that is created and managed by Spark SQL. It stores both the data and the metadata in the default location specified by the spark.sql.warehouse.dir configuration property. When a managed table is dropped, both the data and the metadata are deleted from the file system.

Option B is not correct, as the size of the table's data does not affect the behavior of dropping the table. Whether the table's data is smaller or larger than 10 GB, the data files and metadata files will be deleted if the table is managed, and will be preserved if the table is external.

Option C is not correct, for the same reason as option B.

Option D is not correct, as an external table is a table that is created and managed by the user. It stores the data in a user-specified location, and only stores the metadata in the Spark SQL catalog. When an external table is dropped, only the metadata is deleted from the catalog, but the data files are preserved in the file system.

Option E is not correct, as a table must have a location to store the data. If the location is not specified by the user, it will use the default location for managed tables. Therefore, a table without a location is a managed table, and dropping it will delete both the data and the metadata.
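The difference between dropping a managed table and dropping an external table can be illustrated with a toy model. This is only a sketch using the local file system: the ToyCatalog class, its methods, and the file names are hypothetical stand-ins and do not reflect how Spark or Databricks implement the metastore.

```python
import shutil
import tempfile
from pathlib import Path


class ToyCatalog:
    """Toy stand-in for a metastore: maps table name -> (data path, is_managed)."""

    def __init__(self, warehouse_dir: Path):
        self.warehouse_dir = warehouse_dir
        self.tables = {}

    def create_table(self, name, location=None):
        # No explicit location means the table is managed and lives in the warehouse dir.
        managed = location is None
        path = self.warehouse_dir / name if managed else Path(location)
        path.mkdir(parents=True, exist_ok=True)
        (path / "part-0000.parquet").write_text("fake data")
        self.tables[name] = (path, managed)
        return path

    def drop_table(self, name):
        path, managed = self.tables.pop(name)  # the metadata entry is always removed
        if managed:
            shutil.rmtree(path)  # data files are removed only for managed tables


root = Path(tempfile.mkdtemp())
catalog = ToyCatalog(root / "warehouse")
managed_path = catalog.create_table("my_table")
external_path = catalog.create_table("ext_table", location=root / "external" / "ext_table")

catalog.drop_table("my_table")
catalog.drop_table("ext_table")
print(managed_path.exists(), external_path.exists())  # False True
```

After both drops the catalog holds no entries, but only the managed table's directory is gone, which mirrors the behavior described in the explanation of options A and D.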

[Databricks Data Engineer Professional Exam Guide]

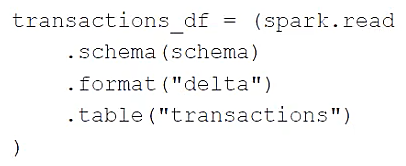

A data engineer is using the following code block as part of a batch ingestion pipeline to read from a composable table:

Which of the following changes needs to be made so this code block will work when the transactions table is a stream source?

A data engineer has developed a data pipeline to ingest data from a JSON source using Auto Loader, but the engineer has not provided any type inference or schema hints in their pipeline. Upon reviewing the data, the data engineer has noticed that all of the columns in the target table are of the string type despite some of the fields only including float or boolean values.

Which of the following describes why Auto Loader inferred all of the columns to be of the string type?

In which of the following scenarios should a data engineer use the MERGE INTO command instead of the INSERT INTO command?