Amazon DAS-C01 AWS Certified Data Analytics - Specialty Exam Practice Test

A company's data science team is designing a shared dataset repository on a Windows server. The data repository will store a large amount of training data that the data science team commonly uses in its machine learning models. The data scientists create a random number of new datasets each day.

The company needs a solution that provides persistent, scalable file storage and high levels of throughput and IOPS. The solution also must be highly available and must integrate with Active Directory for access control.

Which solution will meet these requirements with the LEAST development effort?

Answer : B

A company is using an AWS Lambda function to run Amazon Athena queries against a cross-account AWS Glue Data Catalog. A query returns the following error:

HIVE METASTORE ERROR

The error message states that the response payload size exceeds the maximum allowed payload size. The queried table is already partitioned, and the data is stored in an Amazon S3 bucket in the Apache Hive partition format.

Which solution will resolve this error?

Answer : A

A social media company is using business intelligence tools to analyze data for forecasting. The company is using Apache Kafka to ingest data. The company wants to build dynamic dashboards that include machine learning (ML) insights to forecast key business trends.

The dashboards must show recent batched data that is not more than 75 minutes old. Various teams at the company want to view the dashboards by using Amazon QuickSight with ML insights.

Which solution will meet these requirements?

Answer : C

A company has a process that writes two datasets in CSV format to an Amazon S3 bucket every 6 hours. The company needs to join the datasets, convert the data to Apache Parquet, and store the data within another bucket for users to query using Amazon Athena. The data also needs to be loaded to Amazon Redshift for advanced analytics. The company needs a solution that is resilient to the failure of any individual job component and can be restarted in case of an error.

Which solution meets these requirements with the LEAST amount of operational overhead?

Answer : D

AWS Glue provides dynamic frames, which are an extension of Apache Spark data frames. Dynamic frames handle schema variations and errors in the data more easily than data frames do. They also provide a set of transformations that can be applied to the data, such as join, filter, and map.

AWS Glue provides workflows, which are directed acyclic graphs (DAGs) that orchestrate multiple ETL jobs and crawlers. Workflows can handle dependencies, retries, error handling, and concurrency for ETL jobs and crawlers. They can also be triggered by schedules or events.

The company can perform all of the required ETL tasks with a single AWS Glue job written in PySpark. The job creates dynamic frames of the datasets in Amazon S3, transforms and joins the data, writes the result back to Amazon S3, and loads the data to Amazon Redshift. By using an AWS Glue workflow to orchestrate the job, the company can schedule and monitor the job execution with minimal operational overhead.
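The orchestration described above can be sketched as the two requests that define a workflow and a scheduled trigger for it. This is a minimal sketch, not the exam's reference solution: the workflow name, job name, and trigger name are hypothetical, the job itself is assumed to exist already, and the cron expression assumes the 6-hour cadence from the question. The dictionaries use the keyword arguments of the boto3 Glue client's `create_workflow` and `create_trigger` calls; the optional `apply_requests` helper expects a client such as `boto3.client("glue")` and is not executed here.

```python
def build_workflow_requests(workflow_name, job_name, schedule="cron(0 0/6 * * ? *)"):
    """Return (workflow_kwargs, trigger_kwargs) for the Glue API.

    The names passed in are hypothetical placeholders; the schedule
    assumes the source data arrives every 6 hours.
    """
    workflow_kwargs = {
        "Name": workflow_name,
        "Description": "Join CSV datasets, write Parquet, load Amazon Redshift",
    }
    trigger_kwargs = {
        "Name": f"{workflow_name}-schedule",   # hypothetical trigger name
        "WorkflowName": workflow_name,          # attaches the trigger to the workflow
        "Type": "SCHEDULED",
        "Schedule": schedule,
        "Actions": [{"JobName": job_name}],    # the single PySpark ETL job
        "StartOnCreation": True,
    }
    return workflow_kwargs, trigger_kwargs


def apply_requests(glue_client, workflow_kwargs, trigger_kwargs):
    """Send both requests using a Glue client supplied by the caller."""
    glue_client.create_workflow(**workflow_kwargs)
    glue_client.create_trigger(**trigger_kwargs)


if __name__ == "__main__":
    wf, trig = build_workflow_requests("csv-to-parquet-workflow", "join-and-load-job")
    print(wf)
    print(trig)
```

Building the requests separately from sending them keeps the definition easy to inspect or unit test without AWS credentials.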

A manufacturing company is storing data from its operational systems in Amazon S3. The company's business analysts need to perform one-time queries of the data in Amazon S3 with Amazon Athena. The company needs to access the Athena service from the on-premises network by using a JDBC connection. The company has created a VPC. Security policies mandate that requests to AWS services cannot traverse the internet.

Which combination of steps should a data analytics specialist take to meet these requirements? (Select TWO.)

Answer : A, D

A healthcare company ingests patient data from multiple data sources and stores it in an Amazon S3 staging bucket. An AWS Glue ETL job transforms the data, which is written to an S3-based data lake to be queried using Amazon Athena. The company wants to match patient records even when the records do not have a common unique identifier.

Which solution meets this requirement?

Answer : D

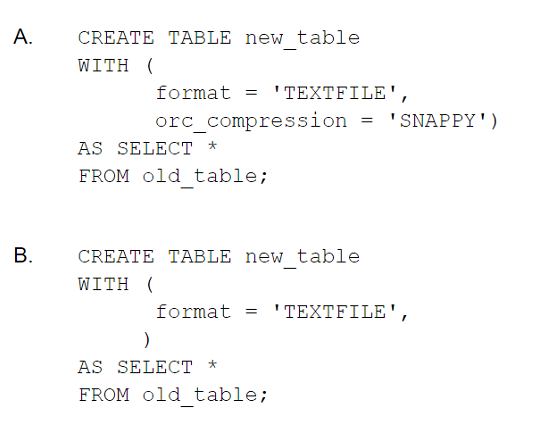

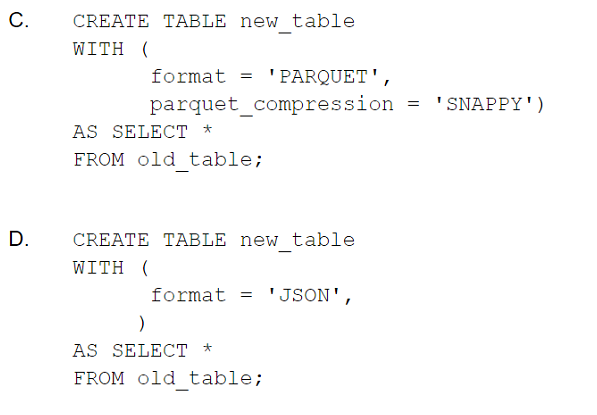

A data analytics specialist has a 50 GB data file in .csv format and wants to perform a data transformation task. The data analytics specialist is using the Amazon Athena CREATE TABLE AS SELECT (CTAS) statement to perform the transformation. The resulting output will be used to query the data from Amazon Redshift Spectrum.

Which CTAS statement should the data analytics specialist use to provide the MOST efficient performance?

Answer : B
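For orientation only, here is the general shape of an Athena CTAS statement that converts CSV data to a compressed columnar format. It is a sketch under stated assumptions and does not reproduce any of the answer options, which appear only in the images. The table names and S3 location are hypothetical, and the choice of Snappy-compressed Parquet is an assumption made for illustration.

```python
def build_ctas(target_table, source_table, external_location):
    """Assemble an Athena CTAS statement that writes Snappy-compressed Parquet.

    All three arguments are hypothetical placeholders supplied by the caller.
    """
    return (
        f"CREATE TABLE {target_table}\n"
        "WITH (\n"
        "    format = 'PARQUET',\n"                     # columnar output format
        "    write_compression = 'SNAPPY',\n"           # compression for the written files
        f"    external_location = '{external_location}'\n"
        ") AS\n"
        f"SELECT * FROM {source_table}"
    )


if __name__ == "__main__":
    print(build_ctas("sales_parquet", "sales_csv", "s3://example-bucket/sales_parquet/"))
```

Columnar formats let engines such as Redshift Spectrum read only the columns a query needs, which is why format and compression are the properties to compare across CTAS variants.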